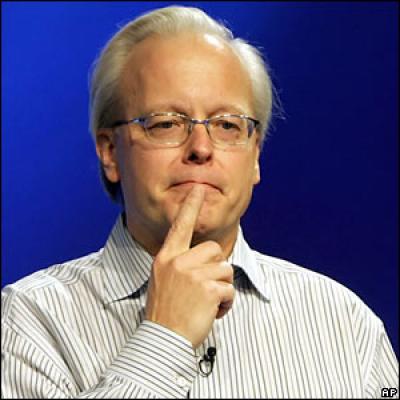

Ray Ozzie, Microsoft’s chief software architect, took time out of a very busy schedule to chat with eWEEK Senior Editor Darryl K. Taft at the Microsoft Professional Developers Conference in Los Angeles. Ozzie, who sets the tone for Microsoft’s overall software strategy, finally got the opportunity to unveil part of his grand design for the future of Microsoft, in the form of Windows Azure – Microsoft’s cloud operating system.

Ozzie spoke with eWEEK about Azure, but also about a variety of subjects, including open source, interoperability, software modeling and domain-specific languages. In making his mark with Azure, Ozzie also signals to the world that he is in charge.

How much of Microsoft’s increased interest in interoperability and support for open source comes from you?

It’s hard to personalise it like that. Like in any big organisation, the way you do any kind of change management is my simple rule of thumb: You say something, you do a symbolic public hanging of something, and then you have to find somebody at the edge who’s actually going to be the change agent who drives things through. You just can’t make change happen when you’re at that level, that many levels of abstraction removed from where the work actually gets done.

So, have I been a proponent? Absolutely. Absolutely. And not in interoperability for interoperability’s sake – interoperability because that’s what customers do, that’s what customers want, that’s what customers need.

There was an allergy in some sectors within Microsoft to interop because they thought it was a code word for “do what people don’t want us to do. … Do what people are telling us to do, and we’re required to do.” But coming from my background, it’s what people do, it’s what they need. And we should be comfortable in our own skin with who we are. Like, what’s the big deal, why be so insecure? We are Microsoft; it’s OK. We can actually have connections.

So, yeah, I’ve tried to set the tone. I’ve done a couple of things internally that it would be the inverse of the public hanging. It would be a lot of attention to something that is doing something good. But really it’s just mainly the people in Bob’s [Muglia, senior vice president of Microsoft’s Server and Tools Business] group who have just started to do that.

One technology a lot of people look to and call for more openness on is Silverlight. Do you have any plans to open up Silverlight in any way?

Like what kind of openness are you talking about?

Well, at the very least putting it under the OSP [Microsoft’s Open Specification Promise].

They can just go get the Mono source code and start playing with that. Or Moonlight. That’s Miguel’s [de Icaza, head of the Mono project and the Moonlight effort to run Silverlight on Linux] project. They don’t need our source code; they’ve got theirs.

I think people, including Miguel, feel like there should be more from Microsoft.

OK. I’ll accept what you said. Honestly, I haven’t heard that, but we travel in different circles. But are you saying – was the question, Will we consider open-sourcing it?

That is a question. I think I know the answer, and the answer is no.

I’ll just give you my perspective. If there was a benefit to open-sourcing something, a benefit, like a customer benefit, then I don’t see why we wouldn’t think about it. I mean, we open-sourced a lot of the .NET Framework.

To me it’s a very pragmatic choice. I think any company these days, any technology provider, even Microsoft, has to find the right balance of being a contributor and user of open source. If you look at what Apple has done with WebKit – actually if you look at Apple’s entire stack, it’s masterful in its use of different licenses and different code.

Microsoft, we make our money on proprietary software, but there are areas where interoperability is important, there are areas where cross-platform implementations that we might not pay enough attention to might be important that we would consider it. But I can’t say in the Silverlight case. I just hadn’t heard it. I mean, I think on the Mac we should be doing such a bang-up job that nobody would care about open source on the Mac. We’re doing it for Nokia. I think we’re doing it for Windows. So, that leaves Linux.

I’m interested in tools and application development. So I’m interested in Oslo and what the Microsoft modeling strategy means for the Windows Azure cloud environment. And I’m also interested in your thoughts on modeling altogether.

In the abstract I’ll just say the quest, the ultimate goal, is that we push the limits as much as we can to see how much we can abstract into modeling from the entire life cycle from the analyst to the developer to the person who understands … from the person who understands the business problem to be solved to the developer who will probably in some cases have to wrap it with code, with some procedural code, to how it gets deployed, and to how it gets managed in an operational environment.

The Oslo folks have been at the leading edge of architecture and starting with a great conceptual vision, and building out from that conceptual vision.

If you look at the … I’m kind of giving you the sausage factory view here. If you look at Azure, when you have a chance to actually open the hood, what you’ll see is it’s so fundamentally model-driven in terms of how it operates, and those guys have been working very, very closely together. They aren’t syntactically mapped yet, but it doesn’t matter, because Don’s [Box, a Microsoft distinguished engineer] got some grammar that will map to it or whatever.

But the point is in essence what Azure does is it takes a service model, a very robust service model, that basically says there are switches, there are multiplexers, there are load balancers, there are Web front ends; you just define your roles, you define the relationships between those roles, you say how many of each in a balanced system, you express maximums, minimums, you say I want this much affinity between these roles, I want this much of the replicas of this role, I want this much – I don’t know what the word is for anti-affinity – I want these many to be in a different data centre far away. Like you have two-thirds of them close to each other and have one-third of them far away. It’s a fairly sophisticated description so that the service fabric, so that the operational fabric can take your code and just do it.
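To make the idea of a declarative service model concrete, here is a minimal Python sketch. It is not Azure's actual service model format, which the interview does not show. The names `Role`, `ServiceModel`, `place`, and `far_fraction` are hypothetical and chosen only for illustration. The sketch declares roles, the relationships between them, minimum and maximum instance counts, and the share of replicas to keep in a distant data centre, then leaves the placement arithmetic to a function standing in for the fabric.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    """One role in the service (hypothetical shape, not Azure's schema)."""
    name: str
    min_instances: int
    max_instances: int
    far_fraction: float = 0.0  # share of replicas to place far away


@dataclass
class ServiceModel:
    """Purely declarative description: roles plus links between them."""
    roles: list[Role] = field(default_factory=list)
    links: list[tuple[str, str]] = field(default_factory=list)

    def validate(self) -> None:
        """Reject descriptions that are internally inconsistent."""
        names = {role.name for role in self.roles}
        for role in self.roles:
            if not 0 < role.min_instances <= role.max_instances:
                raise ValueError(f"bad instance bounds for {role.name}")
            if not 0.0 <= role.far_fraction < 1.0:
                raise ValueError(f"bad far_fraction for {role.name}")
        for source, target in self.links:
            if source not in names or target not in names:
                raise ValueError(f"link {source}->{target} names unknown role")


def place(role: Role, wanted: int) -> dict[str, int]:
    """Clamp the requested count to the declared bounds, then split it
    between a nearby and a distant data centre."""
    count = max(role.min_instances, min(wanted, role.max_instances))
    far = round(count * role.far_fraction)
    return {"near": count - far, "far": far}


# Example: a web front end with one-third of its replicas far away.
web = Role("web_front_end", min_instances=3, max_instances=9, far_fraction=1 / 3)
worker = Role("worker", min_instances=2, max_instances=4)
model = ServiceModel(roles=[web, worker], links=[("web_front_end", "worker")])
model.validate()
plan = place(web, wanted=6)
```

The point of the design is that the description carries no procedural logic. Whoever writes the model only states bounds and preferences, and a separate component decides where instances actually run.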

We’re learning a lot. We’re going to learn a lot over the next year from people actually playing with it, but taking it up to that level, you know, you could never do it without modeling. That’s just the way it is.

Could we pepper that with procedural … you know, letting people add code to make intelligent decisions about the replication? Yeah, we absolutely can and we will, but right now it’s about as purely declarative as you can get.

But the goal is to get the whole tool chain, starting all the way at the business analyst and all the way through, so we’ll see. We’re at the point now where we’ve at least got a common repository between a few of the projects that are going on in-house, and we’re moving forward.

It’s a little bit difficult. … We’ve encountered some challenges on the – not to digress too much – but on the Azure side because we have a fundamental, very fundamental requirement of the system that there be no single point of failure, and the fabric controller itself, if it uses a database as a repository, it really has to. There’s too much complexity there for it to rely on, unless it’s a packaged subsystem on one node. Because it’s in control; the question is if multiple … if the fabric servers, how does it know which direction …

What’s your stance on DSLs [domain-specific languages] and their effectiveness?

Well, I’m not the expert on that. I just want to say that. I’ve talked to Charles Simonyi on that. And, you know, if you really want to write a good article about that – and it has nothing to do with Microsoft technologies. … But this is a guy who’s truly into this, and he’s a lot more in touch with customers. He’s shown me examples of DSLs that have been quickly whipped up for a specific business customer, and it works. You know, once they get exposed to it, it’s extremely productive. But I have not in my career been exposed enough to those situations where I could actually draw a pattern to see how much of this is going to be used by the programmer who’s closest to the business person, or really by the business person. I just can’t tell.

It’s like I don’t want to get trapped in the same thing when we were in the 4GL era of, well, everybody is just going to draw these flowcharts, and it’s all going to be goodness; it just didn’t work out that way.

That’s why I was curious … because of that potential effect.

I’ll just say it this way. I think there’s a … in terms of my leadership style I keep on trying to draw out of these people who build these things under Bob [Muglia] to show me the concrete connections as to how to get from where you are to where you will be, so that you don’t end up building a big sandbox that nobody ends up using. There’s that danger with any brilliant engineers and designers.

But … I absolutely believe in the value of modeling, unquestionably believe in the value of modeling.

Yeah, and that actually was where I was headed with that question – the whole sandbox issue.

You’ll definitely see some of that. You know, I’m not going to deny that. But they wouldn’t be allowed to do this unless there weren’t also … you may not be aware of this. All the way into the field there are connections. David Vaskevitch has a group that in essence does briefings with enterprise customers, shows them the most recent stuff, and tries to do some level of plausibility connection between what people are actually doing and what’s being built. The connection is not completely there yet, and in PDC you’re not going to see that.

But if it works as planned, it seems like it could be a big shortcut compared with what some others might be doing.

Well, you’re more familiar – I’m being very straight – you’re more familiar with that than I am at a detail level. I’m just trying to make sure that the two connections that I can make happen, happen. One is to make sure that they keep staying in touch with the customer, and one is to make sure that for the people who are actually racing to a solution like the Azure … they use it very pragmatically. They weren’t worrying about the theory of modeling and all the generality of it. They did it because they needed it and it works; it’s the best way of doing it. And connecting that power back into those tools at the same time. I’m feeling good that we’re going in the right direction.

I want to go back to Azure. Could you give me a little of the concept of how it came about, and from there how it blossomed, and how you guys picked the development team and so on?

I’ll just say in ’05 it was kind of an important year – it was the first year I was there – I wrote up some papers and presentations that ended up basically taking the viewpoint that you saw here today, which is there are back-end things that are happening, there are front-end things that are happening. The right way to do things is to go to the groups and just basically say will you do it. The problem is that at that moment in time we were in the middle of Vista and in the middle of Office 2007 – lots of developers were just heads down.

And so what was recommended and what we ended up doing was spending – you know, just creating new groups, one that ended up being Live Mesh, one that ended up being this thing, this Red Dog project that ended up turning out this way.

Amitabh Srivastava, who was up yesterday, was the kind of founder, Dave Cutler co-founder of that group, and they built it up from there. And I seeded them with the vision, and then they went and they sceptically thought about it, and then they went on their own reality check tour of visiting teams and visiting the data centres, and then visiting the services teams.

Because the services teams at Microsoft were kind of off to the side, they weren’t the real men. They didn’t develop OSes, they just ran those wimpy things like MSN. But they went out there and they said, “Wow, this is like a whole different world. There is a different OS out there that should be there, and we’re wasting money, and we’re this and we’re that.”

They found things like some degree of excess capacity of machines that were powered on waiting to be provisioned. Why were they powered on even though they weren’t being used? Because they needed their OS updates to be ready in case they were needed – they were just wasting power.

And energy is a big deal these days, and so basically they just said, “Look, we can make a real difference here across a broad variety of properties. And the more properties that we’re running, the more we can save”. Because every property we had, had its own stack of machines that was waiting for spare capacity from itself, and each one had slightly different configurations.

They saw it as a huge innovation opportunity, and so I’m just really pleased that they took it on.
