
Meta Platforms is once again turning to facial recognition in an effort to protect users from celeb-bait ads and enable faster account recovery.

Meta announced the move this week, saying that scammers are “relentless and continuously evolve their tactics to try to evade detection, so we’re building on our existing defenses by testing new ways to protect people and make it harder for scammers to deceive others.”

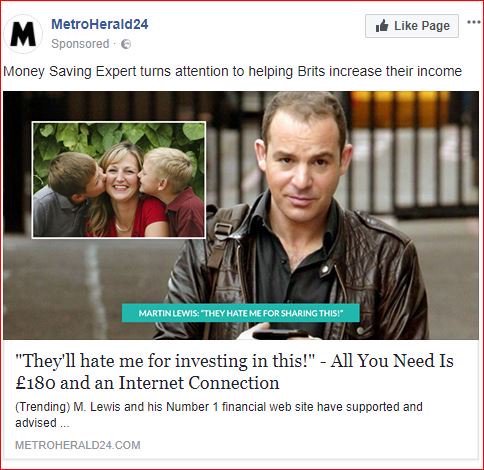

Celeb-bait ads are adverts that fraudulently use images of celebrities, typically to promote investment schemes and cryptocurrencies.

Celeb-bait ads

Martin Lewis, the founder of MoneySavingExpert, famously sued Facebook in April 2018 after the social networking firm had allegedly refused to stop publishing scam financial adverts that featured his “picture, name and reputation.”

Lewis said that before he began the lawsuit he had been fighting with Facebook for over a year to get it to stop publishing scam adverts that used his name and picture. One woman reportedly lost £100,000 to the scam adverts.

In January 2019 Lewis dropped his High Court lawsuit against Facebook, after the company agreed to introduce a scam ads reporting button and make a financial donation (Facebook agreed to donate £3m to Citizens Advice).

In November 2021 Meta closed down the use of facial recognition on its platform, after a decade of privacy concerns over its use on Facebook.

Facial recognition

But on Tuesday the social media giant said it is testing the technology again as part of a crackdown on ‘celeb-bait’ scams.

“We know security matters, and that includes being able to control your social media accounts and protect yourself from scams,” it stated. “That’s why we’re testing the use of facial recognition technology to help protect people from celeb-bait ads and enable faster account recovery. We hope that by sharing our approach, we can help inform our industry’s defenses against online scammers.”

“Scammers often try to use images of public figures, such as content creators or celebrities, to bait people into engaging with ads that lead to scam websites, where they are asked to share personal information or send money,” said the platform. “This scheme, commonly called ‘celeb-bait,’ violates our policies and is bad for people that use our products.”

“Of course, celebrities are featured in many legitimate ads. But because celeb-bait ads are designed to look real, they’re not always easy to detect,” it added.

“Our ad review system relies primarily on automated technology to review the millions of ads that are run across Meta platforms every day,” it said. “We use machine learning classifiers to review every ad that runs on our platforms for violations of our ad policies, including scams. This automated process includes analysis of the different components of an ad, such as the text, image or video.”

“Now, we’re testing a new way of detecting celeb-bait scams,” it stated. “If our systems suspect that an ad may be a scam that contains the image of a public figure at risk for celeb-bait, we will try to use facial recognition technology to compare faces in the ad to the public figure’s Facebook and Instagram profile pictures. If we confirm a match and determine the ad is a scam, we’ll block it. We immediately delete any facial data generated from ads for this one-time comparison, regardless of whether our system finds a match, and we don’t use it for any other purpose.”
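As an illustration only, the flow Meta describes can be sketched in a few lines of Python. This is not Meta's implementation. Every name here (`Ad`, `review_ad`, `extract_faces`, `is_scam`), the use of cosine similarity on face embeddings and the 0.8 threshold are assumptions made for the example. The sketch shows the three steps in the statement: compare faces only when an ad is already suspected, block only when there is both a match and a scam verdict, and delete the facial data whatever the outcome.

```python
from dataclasses import dataclass
from math import sqrt
from typing import Callable, List, Sequence

Embedding = Sequence[float]  # hypothetical numeric representation of a face


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Similarity between two face vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Ad:
    ad_id: str
    suspected_scam: bool  # assumed output of an upstream automated review


def review_ad(
    ad: Ad,
    profile_faces: List[Embedding],
    extract_faces: Callable[[Ad], List[Embedding]],  # hypothetical helper
    is_scam: Callable[[Ad], bool],                   # hypothetical helper
    threshold: float = 0.8,                          # assumed cut-off
) -> str:
    """Return "block" or "allow" for a single ad."""
    # Facial recognition is only attempted for ads already under suspicion.
    if not ad.suspected_scam:
        return "allow"

    ad_faces = extract_faces(ad)
    try:
        matched = any(
            cosine_similarity(face, profile) >= threshold
            for face in ad_faces
            for profile in profile_faces
        )
        # Block only if a face matches AND the ad is judged to be a scam.
        return "block" if matched and is_scam(ad) else "allow"
    finally:
        # Discard the facial data from the ad whether or not a match was found.
        ad_faces.clear()
```

In this sketch the `finally` clause is what models the "regardless of whether our system finds a match" part of the statement: the list of face vectors taken from the ad is emptied on every path out of the comparison.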

Meta reportedly plans to roll out the trial globally from December, excluding some large jurisdictions where it does not have regulatory clearance, such as the UK, the European Union, South Korea and the US states of Texas and Illinois.