US Agency Deepens Tesla Autopilot Investigation

Federal agency upgrades investigation into Tesla’s Autopilot, in what is being touted as the first step before issuing a recall

Tesla is facing an upgraded investigation of its Autopilot driver assistance system by the US federal vehicle safety regulator.

The National Highway Traffic Safety Administration (NHTSA) announced this week that it is upgrading its preliminary investigation to an “engineering analysis”, the step that comes before the agency decides whether to seek a recall.

It was back in August last year that NHTSA launched a formal investigation of Tesla’s Autopilot, following a series of high-profile fatal crashes.

Autopilot investigation

It should be noted that NHTSA’s investigation covers only Tesla Model Y, X, S and 3 vehicles from 2014 to 2022, an estimated 830,000 vehicles in total.

An official investigation had been on the cards for some time. In November 2020, NHTSA began a public consultation on ways to improve the safety of ‘self-driving’ cars.

Then in June 2021, NHTSA ordered all car makers equipping their vehicles with automated driving systems to begin reporting crashes, so the US regulator could “collect information necessary for the agency to play its role in keeping Americans safe on the roadways.”

Two months later it launched its official probe into Tesla’s Autopilot system.

Then in October 2021, the agency asked Tesla why it had not issued a recall when it made software updates to Autopilot designed to improve the vehicles’ ability to detect emergency vehicles.

Earlier, in May 2021, Tesla had changed its Autopilot technology by dropping the use of radar.

Instead, Tesla switched to a camera-focused Autopilot system for its Model 3 and Model Y vehicles in North America.

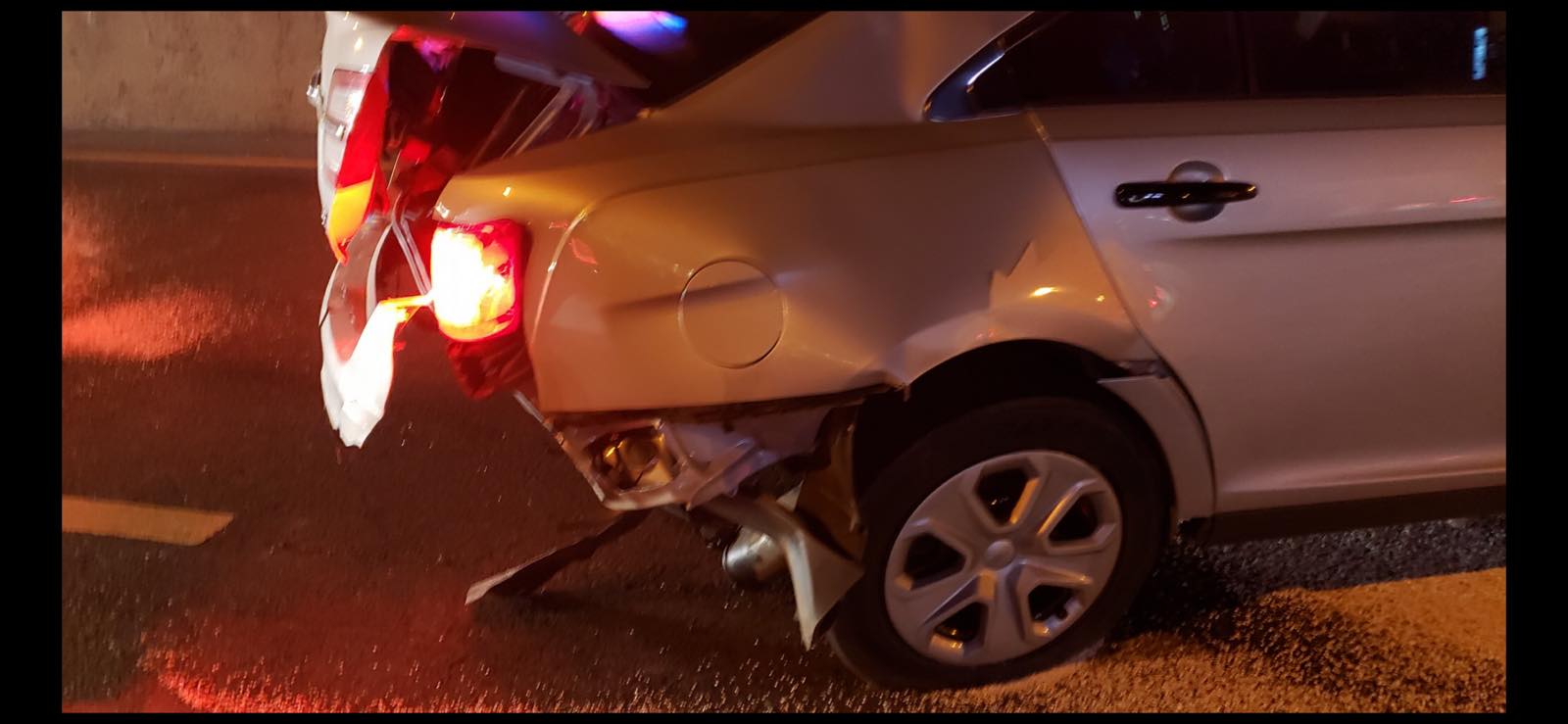

High profile crashes

Over the past five years, Tesla EVs have been at the centre of a number of incidents involving the use of automated driving systems, including multiple crashes, some of them fatal.

But it is Tesla crashes into emergency vehicles that are especially concerning the US authorities.

In December 2019, for example, a driver was charged after he engaged Autopilot on his Tesla Model 3 so he could check on his dog in the back seat.

The Model 3, with Autopilot engaged, then crashed into a stationary Connecticut State Police car that had its blue lights flashing as it attended to a broken-down car.

In September 2021, five police officers in Texas sued Tesla after they and a police dog were ‘badly injured’ when an unnamed driver crashed his Tesla Model X at 70mph (112kph) into the back of two parked police cruisers. The officers had stopped to investigate another vehicle over suspected narcotics offences.

The driver was drunk and had been relying on his Tesla to drive him home when it crashed, wrecking the police cars and leaving the officers with “severe injuries and permanent disabilities.”

Another Tesla crash led, in January 2022, to what was reported as the first US case of a driver being charged with vehicular manslaughter while using a partially automated driving system. The driver’s Model S went through an intersection with Autopilot engaged, striking a Honda Civic and killing two people.

Upgraded investigation

NHTSA said that its data gathering had initially shown eleven crashes with first responder vehicles, and that six more incidents were subsequently identified.

In all, the agency is investigating sixteen “first responder and road maintenance vehicle crashes” and 106 additional accidents that followed the same pattern but didn’t involve emergency vehicles.

“Accordingly, PE21-020 is upgraded to an Engineering Analysis to extend the existing crash analysis, evaluate additional data sets, perform vehicle evaluations, and to explore the degree to which Autopilot and associated Tesla systems may exacerbate human factors or behavioral safety risks by undermining the effectiveness of the driver’s supervision,” the agency said.

“In doing so, NHTSA plans to continue its assessment of vehicle control authority, driver engagement technologies, and related human factors considerations,” the agency said.

In February 2022, NHTSA began a separate investigation into complaints that Tesla vehicles suddenly brake at high speeds, a problem known as “phantom braking.”

Careless drivers

But it is fair to say that, on the surface at least, Tesla’s Autopilot system appears to have been abused and misused by drivers on many occasions.

In September 2020, for example, a Tesla driver in Canada was charged when police found him and his passenger asleep in fully reclined seats while the Tesla drove along a highway in autonomous mode at more than 140kph (87mph).


Then in May 2021, the National Transportation Safety Board (NTSB) issued its preliminary findings on a Tesla crash in Texas that killed two men, who local police had said were allowing the car to drive itself on Autopilot while there was no one in the driver’s seat.

Elon Musk had been quick to cast doubt on that law enforcement theory, saying that data recovered so far showed Autopilot was not enabled.

However, engineers at the influential US magazine Consumer Reports (CR) then demonstrated how easily the Autopilot driver monitoring system could be defeated.

CR said its engineers easily tricked a Tesla Model Y into driving on Autopilot with no one in the driver’s seat, a scenario that would present extreme danger if it were repeated on public roads.