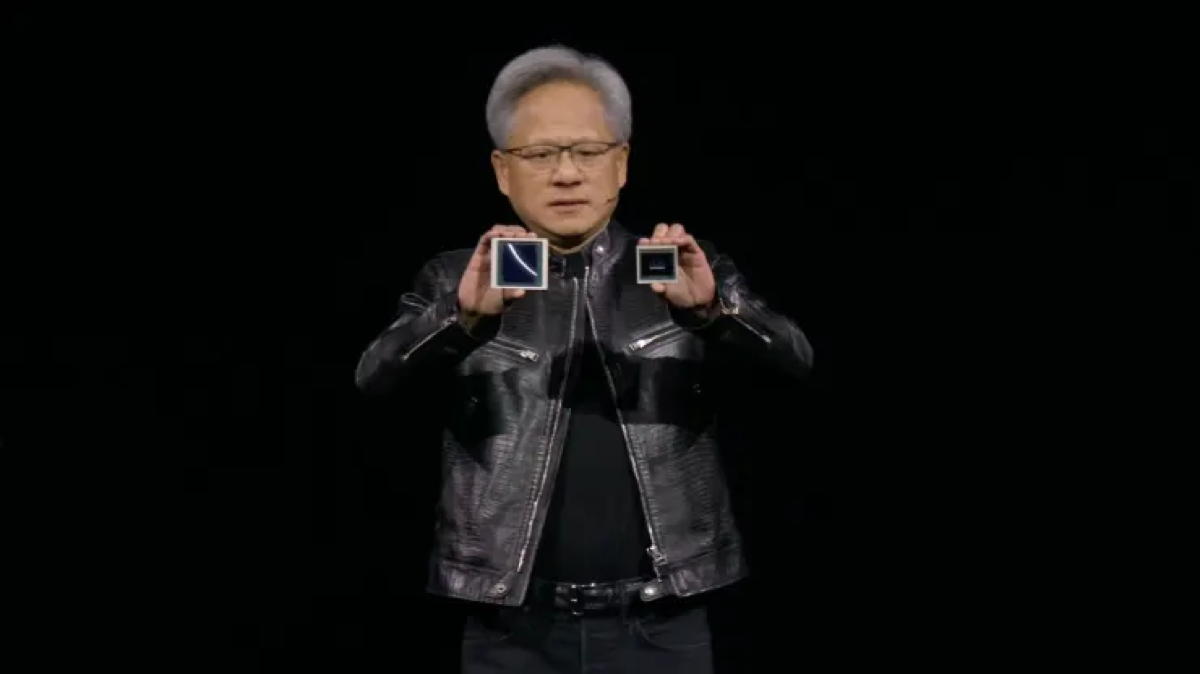

Nvidia chief executive Jensen Huang compares size of 'Blackwell' GPU to that of current 'Hopper' H100 chip at GTC developer conference in March 2024. Image credit: Nvidia

Nvidia on Monday announced a next-generation platform for its market-leading graphics processing units (GPUs), along with new software, as it looks to build on its success as a provider of artificial intelligence (AI) hardware.

The announcements were made at Nvidia’s GPU Technology Conference (GTC) developer show in San Jose, the first to be held in person since 2019.

The new platform, “Blackwell”, powers the B200 GPU, the successor to the current-generation “Hopper”-based H100.

“Hopper is fantastic, but we need bigger GPUs,” chief executive Jensen Huang told attendees at a keynote speech.

The update is the first since Hopper was announced in 2022, and the first since the AI boom triggered by OpenAI’s public launch of ChatGPT in late 2022.

The B200 offers up to 20 petaflops of computing performance, compared with 4 petaflops for the H100, allowing companies to develop larger and more complex AI models, Nvidia said.

The new chip includes a transformer engine built specifically to run transformer-based AI models such as the one behind ChatGPT.

Nvidia said cloud providers including Amazon, Google, Microsoft, and Oracle planned to deploy the GB200 Grace Blackwell Superchip, which includes two B200 GPUs along with an ARM-based Grace CPU.

Amazon Web Services plans to build a server cluster of 20,000 GB200 chips, the company said.

The firm said such a system could power a 27 trillion-parameter model, far larger than today’s biggest models, such as OpenAI’s GPT-4, which reportedly has 1.7 trillion parameters.

The new GPUs will also power the GB200 NVL72, a system that combines 72 Blackwell GPUs with other Nvidia components designed for AI models.

Along with the new hardware, Nvidia is expanding its software business to capitalise on its leading position among AI developers, who rely on Nvidia’s CUDA software platform.

A new offering called NIM provides cloud-native inference microservices for more than two dozen popular foundation models, including models built by Nvidia, the company said.

Inference refers to the process of running an AI model, as opposed to developing it. NIM is designed to let users run AI models on a range of Nvidia hardware they own, either locally or in the cloud, rather than being restricted to renting capacity from a third-party cloud provider.
