Apple is developing its own artificial intelligence (AI) tools to compete with established generative AI players such as OpenAI and Google.

This is according to a Bloomberg report by noted Apple watcher Mark Gurman. However, the report states that Apple has yet to devise a clear strategy for releasing the technology to consumers.

Citing people with knowledge of the efforts, Gurman reports that Apple has built its own framework for creating large language models (the AI-based systems at the heart of new offerings like ChatGPT and Google’s Bard).

Chatbot service

Using that framework, known as “Ajax,” a small team of Apple engineers has reportedly built a chatbot that some have dubbed “Apple GPT.”

Access to the chatbot is limited within Apple.

According to Bloomberg, some Apple staffers believe the company is aiming for a significant AI announcement next year.

The report suggested the development signifies that Apple is taking recent advances in AI technology seriously and is considering integrating them into future products.

It should be noted that Apple rarely uses the term “artificial intelligence,” instead opting for the more academic “machine learning.”

Similarly, when it unveiled the Vision Pro headset, Apple described the headset’s augmented reality experience as “spatial computing.”

Late arrival

There is little doubt that Apple is late to the AI party, despite its use of machine learning in various products, including Siri’s speech recognition and the Photos app’s ability to detect faces and pets.

Rivals have moved faster. Microsoft, for example, has already integrated OpenAI’s ChatGPT technology into its software, including its Bing search engine and Office suite.

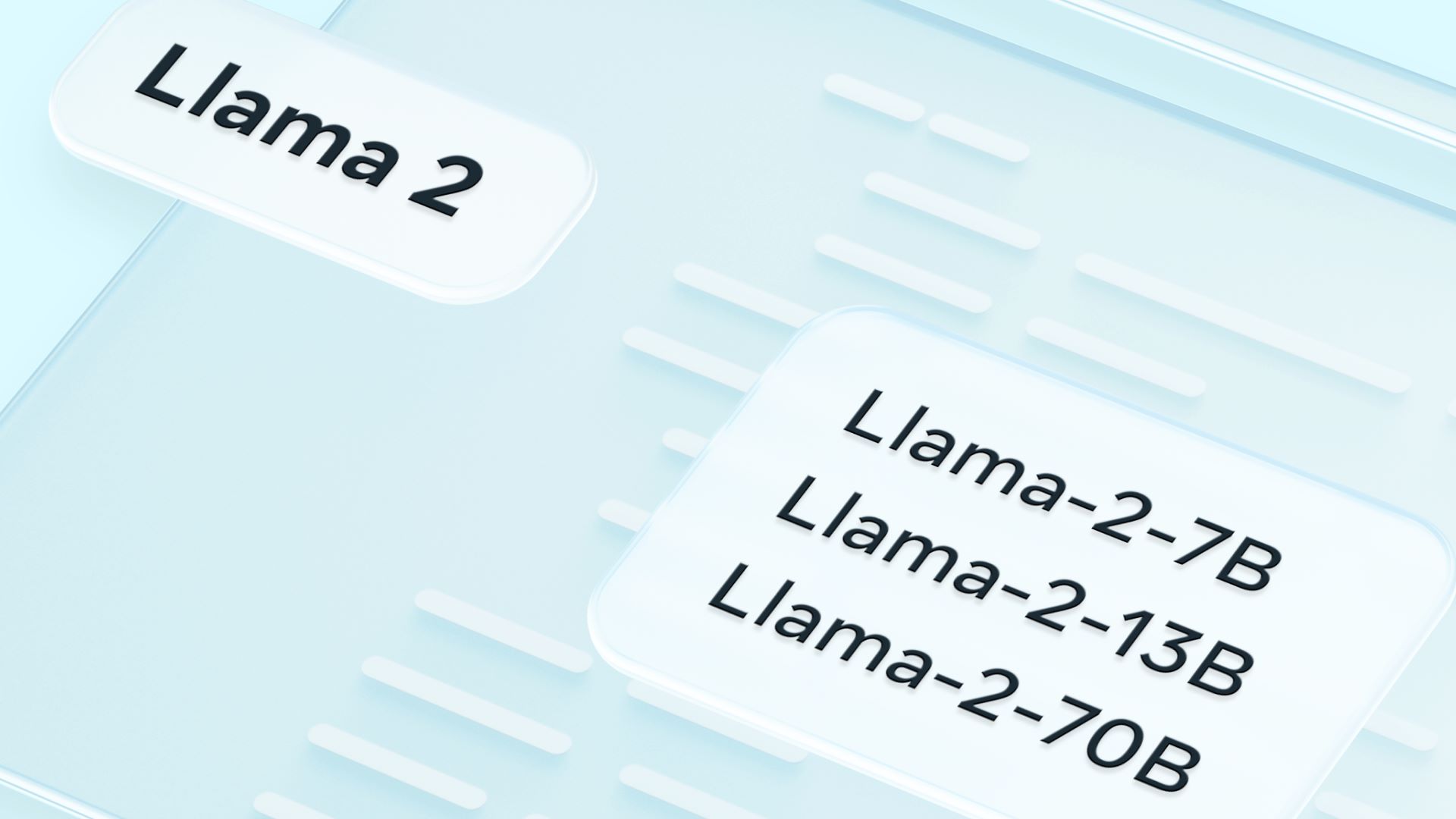

Google meanwhile has integrated its Bard into its search engine, Amazon will offer LLMs (large language models) through AWS and Meta open-sourced a big LLM project this week.

Image credit Meta Platforms

Also this week, Qualcomm said it would work with Meta so that Meta’s LLMs could run directly on Android devices, rather than on distant servers in the cloud.