Meta Platforms this week unveiled the second generation of its custom AI processor, amid a slew of AI chip announcements from other tech firms.

In a blog post, Meta announced version 2 of its Meta Training and Inference Accelerator (MTIA), following the release of the first version in May 2023.

Meta said the next generation of its large-scale infrastructure is being built with AI in mind, including support for new generative AI (GenAI) products and services, recommendation systems, and advanced AI research.

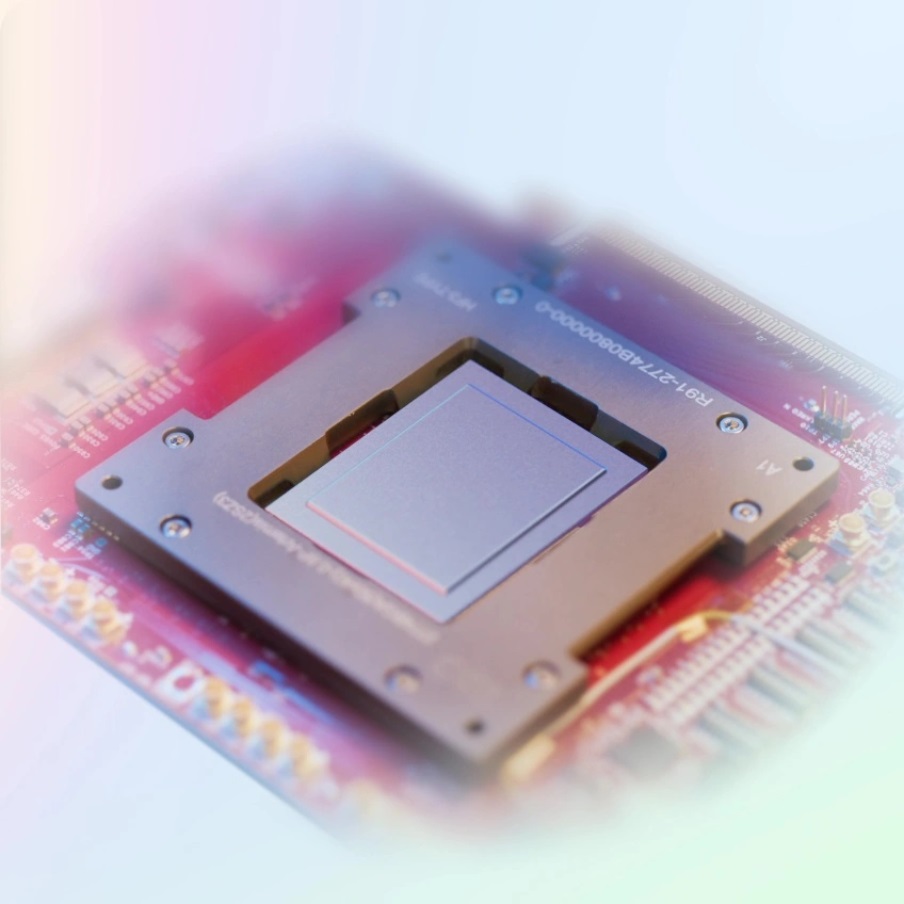

Image credit: Meta Platforms

MTIA v2

MTIA v1 was Meta’s first-generation AI inference accelerator, designed in-house for Meta’s AI workloads, namely its deep learning ranking and recommendation models.

“MTIA is a long-term venture to provide the most efficient architecture for Meta’s unique workloads,” it said. “MTIA v1 was an important step in improving the compute efficiency of our infrastructure and better supporting our software developers as they build AI models that will facilitate new and better user experiences.”

MTIA v2 (codenamed Artemis) has been designed to work with Meta’s current technology infrastructure while also supporting future AI advancements.

According to Meta, the architecture of the new generation MTIA is “fundamentally focused on providing the right balance of compute, memory bandwidth, and memory capacity for serving ranking and recommendation models.”

Meta is clearly hoping MTIA v2 will help lessen its reliance on Nvidia’s AI chips and reduce its overall energy costs.

Nvidia is estimated to hold an 80 percent share of the AI chip market, and its H100 and A100 chips serve as general-purpose AI processors for many AI customers.

But Nvidia’s AI chips are not cheap. While Nvidia does not disclose H100 prices, each chip reportedly sells for between $16,000 and $100,000, depending on the volume purchased and other factors.

Meta reportedly plans to bring its total stock to 350,000 H100 chips in 2024, demonstrating both the hefty financial investment required to compete in this sector and the value of the organisations supplying these semiconductors.

Under the hood

Meta said its new accelerator consists of an 8×8 grid of processing elements (PEs).

“These PEs provide significantly increased dense compute performance (3.5x over MTIA v1) and sparse compute performance (7x improvement),” said the firm. “This comes partly from improvements in the architecture associated with pipelining of sparse compute.”

“To support the next-generation silicon we have developed a large, rack-based system that holds up to 72 accelerators,” said Meta. “This consists of three chassis, each containing 12 boards that house two accelerators each. We specifically designed the system so that we could clock the chip at 1.35GHz (up from 800 MHz) and run it at 90 watts compared to 25 watts for our first-generation design.”
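To make the rack configuration concrete, the short Python sketch below simply multiplies out the figures Meta quoted. The constant names and the `rack_summary` helper are illustrative assumptions, not anything Meta has published, and the clock and power ratios are plain arithmetic on the quoted numbers rather than benchmark results.

```python
# Figures quoted from Meta's MTIA v2 announcement. The names here are
# illustrative (hypothetical), and the derived ratios are simple arithmetic
# on the quoted numbers, not measured performance results.
PE_GRID_ROWS, PE_GRID_COLS = 8, 8       # 8x8 grid of processing elements per chip
CHASSIS_PER_RACK = 3
BOARDS_PER_CHASSIS = 12
ACCELERATORS_PER_BOARD = 2

CLOCK_V1_GHZ, CLOCK_V2_GHZ = 0.8, 1.35  # 800 MHz (v1) -> 1.35 GHz (v2)
POWER_V1_W, POWER_V2_W = 25, 90         # per-chip power, v1 vs v2


def rack_summary() -> dict:
    """Derive rack-level totals and generation-over-generation ratios."""
    accelerators = CHASSIS_PER_RACK * BOARDS_PER_CHASSIS * ACCELERATORS_PER_BOARD
    pes_per_chip = PE_GRID_ROWS * PE_GRID_COLS
    return {
        "accelerators_per_rack": accelerators,
        "pes_per_chip": pes_per_chip,
        "pes_per_rack": accelerators * pes_per_chip,
        "clock_ratio": CLOCK_V2_GHZ / CLOCK_V1_GHZ,
        "power_ratio": POWER_V2_W / POWER_V1_W,
    }


if __name__ == "__main__":
    for key, value in rack_summary().items():
        # Print floats to two decimals, integers as-is
        print(f"{key}: {value:.2f}" if isinstance(value, float) else f"{key}: {value}")
```

Multiplying 3 chassis by 12 boards by 2 accelerators reproduces the 72 accelerators per rack that Meta describes, while the ratios show the clock rising by roughly 1.7x against a 3.6x rise in per-chip power.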

Meta said this design ensures it can provide denser capabilities with higher compute, memory bandwidth, and memory capacity.

This density allows Meta to more easily accommodate a broad range of model complexities and sizes.